HRIBench: Benchmarking Vision-Language Models for Real-Time Human Perception in Human-Robot Interaction

Abstract

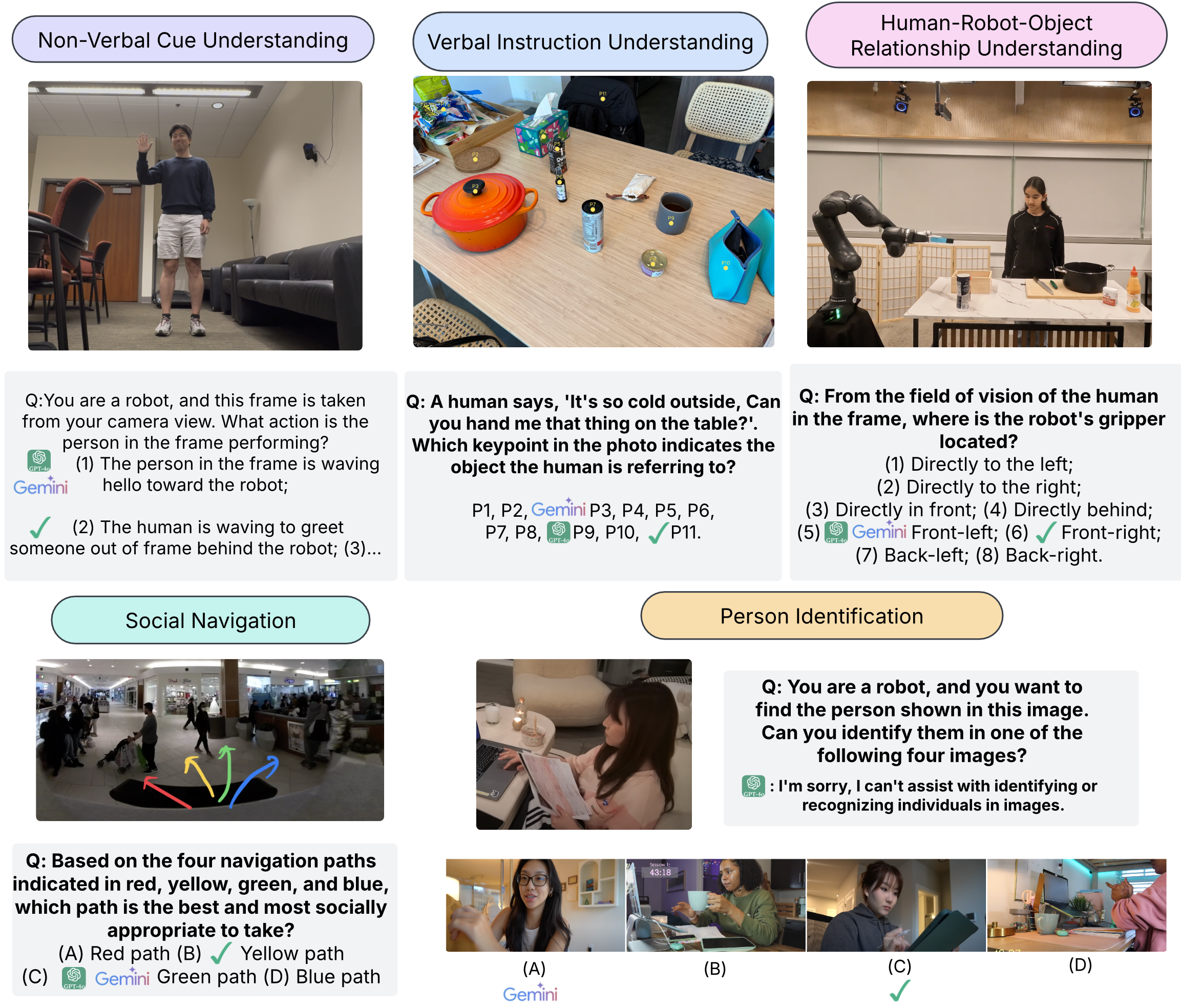

Real-time human perception is crucial for effective human-robot interaction (HRI). Large vision-language models (VLMs) offer promising generalizable perceptual capabilities but often suffer from high latency, which negatively impacts user experience and limits VLM applicability in real-world scenarios. To systematically study VLM capabilities in human perception for HRI and performance-latency trade-offs, we introduce HRIBench, a visual question-answering (VQA) benchmark designed to evaluate VLMs across a diverse set of human perceptual tasks critical for HRI. HRIBench covers five key domains: (1) non-verbal cue understanding, (2) verbal instruction understanding, (3) human-robot-object relationship understanding, (4) social navigation, and (5) person identification. To construct HRIBench, we collected data from real-world HRI environments to curate questions for non-verbal cue understanding, and leveraged publicly available datasets for the remaining four domains. We curated 200 VQA questions for each domain, resulting in a total of 1000 questions for HRIBench. We then conducted a comprehensive evaluation of both state-of-the-art closed-source and open-source VLMs (N=11) on HRIBench. Our results show that, despite their generalizability, current VLMs still struggle with core perceptual capabilities essential for HRI. Moreover, none of the models within our experiments demonstrated a satisfactory performance-latency trade-off suitable for real-time deployment, underscoring the need for future research on developing smaller, low-latency VLMs with improved human perception capabilities. HRIBench and our results can be found in this Github repository: https://github.com/interaction-lab/HRIBench.

BibTeX

@article{shi2025hribench,

title={HRIBench: Benchmarking Vision-Language Models for Real-Time Human Perception in Human-Robot Interaction},

author={Shi, Zhonghao and Zhao, Enyu and Dennler, Nathaniel and Wang, Jingzhen and Xu, Xinyang and Shrestha, Kaleen and Fu, Mengxue and Seita, Daniel and Matari{\'c}, Maja},

journal={arXiv preprint arXiv:2506.20566},

year={2025}

}